Generative AI and LLM-powered search are the top disruptors of SEO in 2025, and optimizing for AI Overviews in 2026 will require adapting content to how language models select and cite authoritative sources.

The way we search has changed. Many of us no longer type a few words into a search box; we ask complete questions and expect clear, concise answers. According to Semrush data from March 2025, about 13 percent of U.S. desktop searches already featured Google AI Overviews, a sign of how quickly this shift is happening.

Now imagine that your website doesn’t just appear in results — it’s quoted or summarized by an AI-driven search engine.

That’s the idea behind LLM SEO: optimizing for large language models that power modern search experiences, not just for classic keyword rankings.

By understanding what an LLM is, how large language models reshape search, and how to optimize content for them, you’ll be ready for the next generation of discovery.

What is LLM SEO and Why It Matters

So, what is an LLM? In simple terms, a large language model (LLM) is an AI system trained on massive amounts of text and data. It can interpret natural language, recognise entities and relationships, and generate coherent responses.

If you have been tracking SEO trends lately, you have probably noticed one major shift — users are getting answers directly from AI-driven search engines like Google AI Overviews, Bing Copilot, or Perplexity. These systems no longer just show links. They create responses that summarize, cite, and sometimes complete the user’s journey without the need to click through.

That’s where LLM SEO comes in. It’s about earning visibility inside those AI-generated answers. The goal is not just to rank but to become the trusted citation an AI engine references.

Key Takeaway: LLM SEO = Entity Clarity + Extractable Structure + Citation Trust.

According to Google Search Central, there are no special tags or codes you need to appear in AI features. What matters is ensuring your content is people-first, well-structured, and easily understandable by both humans and machines. In short, the easier it is for AI to extract and summarize your information, the more likely it is to feature your brand as a source.

Understanding LLMs and AI-Driven Search

Large Language Models (LLMs) are the core of this shift. These models learn from massive datasets and can understand, generate, and organize information conversationally. In AI search, they typically combine retrieval (fetching relevant facts or passages) with generation (composing a natural-language response).
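The retrieve-then-generate pattern can be illustrated with a deliberately simplified Python sketch. It assumes a tiny in-memory list of passages with hypothetical example.com URLs and ranks them by word overlap with the question; production AI search systems use learned embeddings and many more signals, and the `generate` step here only quotes the best passage with a citation instead of calling a language model.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source_url: str  # hypothetical URL, for illustration only
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for learned embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[Passage], k: int = 2) -> list[Passage]:
    """Retrieval step: rank passages by how many question terms they share."""
    q_terms = tokenize(question)
    scored = [(len(q_terms & tokenize(p.text)), p) for p in passages]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant passages
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def generate(question: str, evidence: list[Passage]) -> str:
    """Generation step (stubbed): quote the top passage and cite its source.

    A real system would prompt a language model with the question and evidence.
    """
    if not evidence:
        return "No supporting source found."
    best = evidence[0]
    return f"{best.text} [source: {best.source_url}]"


passages = [
    Passage("https://example.com/what-is-llm-seo",
            "LLM SEO is the practice of optimizing content so AI search engines can cite it."),
    Passage("https://example.com/chilli-grades",
            "Dry red chillies are graded by colour, pungency, and moisture."),
]

question = "What is LLM SEO?"
print(generate(question, retrieve(question, passages)))
```

The point of the toy is the shape of the pipeline: a passage that states a clear, self-contained answer is easy to retrieve and easy to quote with attribution.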

AI search features now work the same way. Google’s AI Overviews and AI Mode, as well as tools like Perplexity, use these models to deliver full answers, not just lists of websites.

As Vercel explains, optimizing for this new era means writing content that’s semantically clear and structured in a way that AI systems can easily parse. If your content is logically organized, rich with entities, and factually precise, it becomes part of the AI’s reasoning chain, which makes it more likely to be cited directly in its responses.

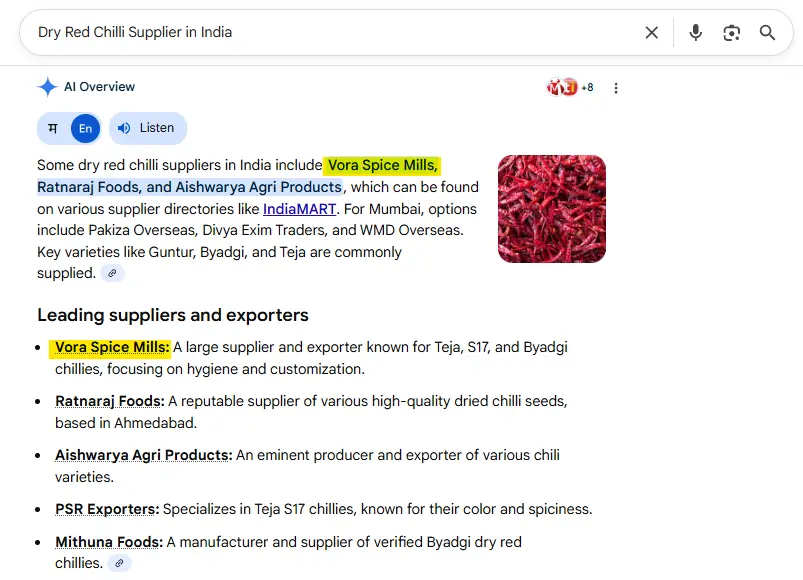

Example: A search for “Dry Red Chilli Supplier in India” in AI Mode may show a summary citing the Vora Spices blog.

This is likely because the content provides definitions, comparisons, and schema that the model can confidently extract.

In simple terms, you want your content to be the “building block” that an AI engine pulls from while generating its answers.

From SGE to AI Overviews to AI Mode

Google’s evolution from Search Generative Experience (SGE) to AI Overviews and now AI Mode reflects how far AI in Search has come.

| Year | Stage | Description |
| --- | --- | --- |
| 2023 | SGE | Introduced generated answers within SERPs. |
| 2024 | AI Overviews | Mainstreamed summaries with cited sources. |
| 2025 | AI Mode | Interactive, conversational exploration powered by Gemini. |

According to Google, the same fundamentals that helped your content rank before still apply. You don’t need new meta tags. Instead, focus on high-quality writing, structured data that reflects on-page content, indexable pages, and a strong user experience.

Interestingly, Google notes that users are now asking longer and more complex questions. And although overall click-through rates may drop, the visits that come from AI Overviews or AI Mode tend to be more intent-driven and valuable. That’s why success should now be measured not just by traffic, but by the quality of engagement your content generates.

Key Takeaway: Success in AI search is measured by citation and engagement, not just traffic volume.

The Rise of Zero-Click Behaviour

The concept of zero-click search has been around for a while, but AI has accelerated it. A Search Engine Land report from March 2025 found that zero-click searches in the U.S. continued to rise year-over-year. At the same time, organic clicks dropped as more user needs were satisfied directly on Google properties.

This means fewer “easy wins” from classic rankings, especially for informational queries, where AI Overviews appear most often.

Data from Semrush estimated that in March 2025, about 13% of U.S. desktop searches already featured AI Overviews. And according to BrightEdge, this number is growing fastest in knowledge-based categories — areas where people expect complete, reliable answers.

That’s why LLM SEO matters now more than ever. If your content is not structured and optimized for AI extraction, you risk becoming invisible in a search landscape where answers come before clicks.

How LLM SEO Differs from Traditional SEO, AEO, and GEO

Search optimization has always adapted to user behavior. From the keyword-stuffed pages of the 2000s to today’s AI-generated answers, the goal has stayed the same: to be visible where your audience looks for information. What has changed is how that visibility is earned, because content is now processed differently. Traditional ranking systems evaluate and order whole pages, while LLM-based systems extract, summarize, and cite individual passages.

To understand how, let’s compare Traditional SEO, LLM SEO, Answer Engine Optimization (AEO), and Generative Engine Optimization (GEO) — the four pillars shaping search today.

Traditional SEO vs LLM SEO vs AEO vs GEO

| Aspect | Traditional SEO | LLM SEO | AEO | GEO |
| --- | --- | --- | --- | --- |
| Core goal | Rank pages in SERPs and drive organic clicks | Earn citations within AI-generated answers | Secure featured-snippet-style placements and direct answers | Optimize content for inclusion in AI-generated responses |
| Primary target | Search crawlers and ranking systems | Retrieval and summarization layers in LLMs | Answer engines in SERPs and virtual assistants | Generative systems that combine retrieval and composition |
| Content design | Keyword clusters, E-E-A-T, technical hygiene | Extractable, semantically clear, well-structured passages | Question-led sections, definitional boxes, concise how-tos | Content variants optimized through testing and data |
| Technical levers | Indexability, Core Web Vitals, structured data | SSR/SSG, JSON-LD, stable static HTML | Schema markup for FAQs, Q&A, and How-To | Experiment frameworks for improving inclusion rate |
| Measurement | Rankings, CTR, organic sessions | AI citations, inclusion in AI Overviews/AI Mode | Featured snippets and answer-layer presence | Lift in generative inclusion and engagement metrics |

Why This Shift Matters

In the past, SEO was all about earning clicks. Today, it’s about earning citations. Search engines no longer just list your page; they read, understand, and summarize it.

This means your optimization strategy must focus on clarity, credibility, and structure. As Vercel points out, “Clarity beats cleverness.” Simple, well-defined sections make your content easier for AI systems to extract and summarize accurately.

Key Takeaway: SEO is no longer just about earning clicks — it’s about earning citations and trust from AI systems.

Principles That Boost LLM Visibility

Here are some principles that can help your content stand out in an AI-first search landscape:

- Write like you teach.

Begin each topic with a short definition, then expand with examples or data. This helps AI systems extract “ready-to-quote” answers.

- Use semantic structure.

Maintain a clear heading hierarchy (H1 > H2 > H3) and use HTML elements that mirror meaning, not style.

- Align schema with visible text.

Your structured data should directly reflect what’s written on the page. Consistency increases trust for both crawlers and LLMs.

- Keep content fresh and interconnected.

Google’s AI Mode works by “fanning out” related queries. Interlinking helps your pages surface across these expanded question sets.

- Cite credible sources.

AI systems tend to favor information grounded in verifiable data. Attribute claims explicitly, with phrases like “According to Google Search Central” or “Search Engine Land reports…”, to strengthen credibility.
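One way to act on “align schema with visible text” is a quick consistency check. The Python sketch below, using only the standard library, pulls JSON-LD blocks out of a page and reports string values from a chosen set of fields that never appear in the visible text. The field list (`headline`, `name`, `description`) and the exact-substring rule are simplifying assumptions, not how Google or any LLM evaluates markup, and the sample page is hypothetical.

```python
import json
from html.parser import HTMLParser

# Assumption: the JSON-LD fields worth checking against the visible text.
CHECKED_FIELDS = ("headline", "name", "description")


class PageParser(HTMLParser):
    """Separates visible text from the contents of JSON-LD <script> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.visible_text: list[str] = []
        self.json_ld_blocks: list[str] = []
        self._in_json_ld = False
        self._in_other_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self.json_ld_blocks.append("")  # start a new JSON-LD block
        elif tag in ("script", "style"):
            self._in_other_script = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._in_json_ld = False
            self._in_other_script = False

    def handle_data(self, data):
        if self._in_json_ld:
            self.json_ld_blocks[-1] += data
        elif not self._in_other_script:
            self.visible_text.append(data)


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase, so formatting differences are ignored."""
    return " ".join(text.split()).lower()


def unmatched_schema_values(html: str) -> list[str]:
    """List checked JSON-LD string values that never appear in the visible text."""
    parser = PageParser()
    parser.feed(html)
    parser.close()
    visible = normalize(" ".join(parser.visible_text))
    missing = []
    for block in parser.json_ld_blocks:
        data = json.loads(block)
        items = data if isinstance(data, list) else [data]
        for item in items:
            for field in CHECKED_FIELDS:
                value = item.get(field)
                if isinstance(value, str) and normalize(value) not in visible:
                    missing.append(f"{field}: {value}")
    return missing


page = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Article",
 "headline": "What Is LLM SEO?",
 "description": "A complete guide to AI search visibility."}
</script>
</head><body>
<h1>What Is LLM SEO?</h1>
<p>LLM SEO helps content get cited in AI-generated answers.</p>
</body></html>
"""
print(unmatched_schema_values(page))  # → ['description: A complete guide to AI search visibility.']
```

Here the headline matches the on-page `<h1>`, while the description exists only in the markup, which is exactly the kind of mismatch the principle warns against.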

Key Takeaway: Clarity beats cleverness — structured facts outperform creative fluff in AI summaries.

AEO: Writing Answers That Get Pulled Verbatim

Answer Engine Optimization (AEO) focuses on creating content blocks designed to be lifted directly into AI responses.

As Ahrefs and CXL guides explain, the key is writing self-contained, question-led sections.

Here’s how you can align your pages with how AI engines extract and cite content:

- Start each section with a “What is…” or “How to…” definition in one to three sentences.

- Follow with short, numbered steps or bullet points for clarity.

- Include FAQ sections that mirror natural language questions from your audience.

These tactics help answer engines — and now AI models — recognize your content as a complete, extractable unit. Think of each paragraph or block as a mini “product” that can stand alone in an answer.
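FAQ sections can be paired with schema.org `FAQPage` markup so the structured data mirrors the visible questions and answers. The Python sketch below builds that JSON-LD from a list of question-and-answer pairs; the function name is illustrative, and the example pair reuses one of this article’s FAQs. Google has limited FAQ rich results to well-known government and health sites since 2023, so treat this markup as a clear description of the page rather than a route to a special search feature.

```python
import json


def faq_page_json_ld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs.

    Each answer should match the visible FAQ text on the page.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


faqs = [
    ("What is LLM SEO in simple terms?",
     "It's the process of optimizing content so that AI-driven search engines can "
     "understand, retrieve, and cite it directly in their generated responses."),
]
markup = faq_page_json_ld(faqs)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Paste the printed `<script>` element into the page alongside the visible FAQ, and regenerate it whenever the on-page wording changes.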

The LLM SEO Checklist You Can Start Today

To turn these ideas into action, start with a simple yet powerful checklist:

- Structure for machines:

Use clean headings, semantic HTML, JSON-LD, and either server-side rendering (SSR) or static generation (SSG) for consistent parsing.

- Write for extraction:

Lead with definitions, provide concise summaries, and format how-tos or FAQs in clear, scannable patterns.

- Model your entities:

Identify and name key people, products, or concepts consistently across pages, and reflect them in schema.

- Prove your claims:

Back up statements with credible sources or internal data to strengthen trust and increase inclusion chances.

- Prioritize user experience:

Google’s guidance for AI features still recommends a good page experience. Treat Core Web Vitals and accessibility as best practices that support AI visibility rather than as formal eligibility requirements.
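For the “structure for machines” item, a small audit can flag pages whose heading levels skip, for example an `<h2>` followed directly by an `<h4>`. This Python sketch uses only the standard library’s `html.parser`; the “exactly one `<h1>`” rule is an assumed house style rather than a Google requirement, so adjust it to your own conventions.

```python
from html.parser import HTMLParser

HEADING_LEVELS = {f"h{n}": n for n in range(1, 7)}


class HeadingAudit(HTMLParser):
    """Records heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_LEVELS:
            self.levels.append(HEADING_LEVELS[tag])


def heading_issues(html: str) -> list[str]:
    """Report a missing or repeated <h1> and any skipped heading levels."""
    audit = HeadingAudit()
    audit.feed(html)
    audit.close()
    issues = []
    h1_count = audit.levels.count(1)
    if h1_count != 1:  # assumed house style
        issues.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, curr in zip(audit.levels, audit.levels[1:]):
        if curr > prev + 1:  # going deeper by more than one level
            issues.append(f"<h{prev}> is followed by <h{curr}>, skipping a level")
    return issues


page = "<h1>LLM SEO</h1><h2>What is LLM SEO</h2><h4>Key takeaway</h4>"
print(heading_issues(page))  # → ['<h2> is followed by <h4>, skipping a level']
```

Moving back up the hierarchy (an `<h3>` followed by an `<h2>`) is not flagged, since that simply starts a new section.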

How AI Overviews and AI Mode Pick Links

Understanding how Google selects and shows links in AI features helps you plan what to optimize.

According to Google Search Central, a page is eligible to appear as a supporting link in AI Overviews or AI Mode when it is indexed and eligible to be shown in Google Search with a snippet; there are no additional technical requirements. In practice, your page should be crawlable, indexable, and previewable, and any structured data should accurately represent the visible content.
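Since a page must be able to show a snippet, a quick pre-check can catch robots meta directives that prevent that. The Python sketch below scans `<meta name="robots">` and `<meta name="googlebot">` tags for `noindex`, `none`, `nosnippet`, and `max-snippet:0`, which Google documents as removing a page from results or suppressing its text snippet. It is a sketch only: it does not check the `X-Robots-Tag` HTTP header, `data-nosnippet` attributes, robots.txt, or whether the page is actually indexed, and the function names are illustrative.

```python
from html.parser import HTMLParser

# Robots meta directives that drop a page from results ("noindex", "none")
# or suppress its text snippet ("nosnippet", "max-snippet:0").
BLOCKING_DIRECTIVES = {"noindex", "none", "nosnippet", "max-snippet:0"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            for token in (attr.get("content") or "").split(","):
                # Normalize e.g. "Max-Snippet: 0" to "max-snippet:0".
                self.directives.append(token.strip().lower().replace(" ", ""))


def preview_blockers(html: str) -> list[str]:
    """Return directives on the page that would prevent a snippet."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return [d for d in parser.directives if d in BLOCKING_DIRECTIVES]


print(preview_blockers('<meta name="robots" content="max-snippet:0, noarchive">'))  # → ['max-snippet:0']
```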

Google also says that clicks from AI Overviews tend to be higher-quality visits, because users have already seen summarized context before clicking. As a result, visitors from these experiences may engage longer and convert better, which is worth confirming in your own analytics.

In AI Mode, Google introduces something called “query fan-out.” When a user asks a complex question, the system expands it into related sub-queries and retrieves multiple supporting sources. The clearer and more comprehensive your content is, the more likely it will be chosen repeatedly across these related topics within the same session.
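Google has not published how query fan-out chooses its sub-queries, but the idea of coverage can still be illustrated. In this Python sketch the sub-queries, their key terms, and the page text are all hypothetical, hand-written for the chilli example earlier; a page “covers” a sub-query when every key term appears in its text. Real systems match meaning rather than exact words, so read the score as a rough content-gap checklist, not a prediction of citations.

```python
import re

# Hypothetical sub-queries a fan-out step might generate for
# "how to choose a dry red chilli supplier in India", each reduced to key terms.
# Google has not published its fan-out logic; these are hand-written examples.
SUB_QUERIES = {
    "chilli grades": {"chilli", "grade"},
    "pungency levels": {"pungency", "shu"},
    "export certifications": {"export", "certification"},
    "moisture standards": {"moisture", "percent"},
}


def words(text: str) -> set[str]:
    """Lowercase word tokens (exact-word matching, no stemming or synonyms)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def fan_out_coverage(page_text: str) -> tuple[float, list[str]]:
    """Return the share of sub-queries covered and their names.

    A sub-query counts as covered when all of its key terms appear on the page.
    """
    present = words(page_text)
    covered = [name for name, terms in SUB_QUERIES.items() if terms <= present]
    return len(covered) / len(SUB_QUERIES), covered


page_text = (
    "Each chilli grade is defined by colour and pungency, measured in SHU. "
    "Export shipments need a phytosanitary certification."
)
share, covered = fan_out_coverage(page_text)
print(f"{share:.0%} of sub-queries covered: {covered}")
```

The uncovered “moisture standards” sub-query points to a section the page could add, which is the practical meaning of optimizing for coverage.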

Key Takeaway: Optimize for coverage, not just ranking — one well-structured page can feed multiple AI answers.

Measuring Success in an Answer-First World

If clicks are no longer the main indicator of visibility, what should you measure instead?

A Search Engine Land report from 2025 found that zero-click searches continue to rise. In this environment, success depends on how often you are cited and on the quality of the visits you do receive.

Here’s how to reframe your KPIs:

- AI Inclusion Rate — Track how frequently your pages are referenced in AI Overviews or other answer engines.

- AI Referrer Quality — Monitor user engagement and conversions from these experiences.

- Overlap Tracking — Compare which of your top-ranking pages also appear in AI Overviews; treat them as separate but complementary channels.

- Engagement Metrics — Use Search Console, GA4, and tools like Semrush or BrightEdge to see how AI traffic performs compared to standard organic visits.
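Search Console reports AI Overviews and AI Mode traffic within its overall Web search data rather than as a separate segment, so inclusion and overlap are usually tracked from your own query checks or a third-party tool export. Assuming you record, for each tracked query, whether your page ranks in the organic top 10 and whether it is cited in the AI answer, the first and third KPIs above reduce to simple ratios. The field names and sample rows below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrackedQuery:
    query: str
    ranks_top10: bool  # our page ranks in the organic top 10 for this query
    cited_in_ai: bool  # our page is cited in the AI answer for this query


def ai_kpis(rows: list[TrackedQuery]) -> dict:
    """Compute AI inclusion rate and organic/AI overlap for tracked queries."""
    total = len(rows)
    cited = sum(r.cited_in_ai for r in rows)
    ranking = [r for r in rows if r.ranks_top10]
    ranked_and_cited = sum(r.cited_in_ai for r in ranking)
    return {
        # Share of all tracked queries where we are cited in the AI answer.
        "ai_inclusion_rate": cited / total if total else 0.0,
        # Of the queries where we rank organically, how often we are also cited.
        "overlap_rate": ranked_and_cited / len(ranking) if ranking else 0.0,
        # Citations on queries where we do not rank in the top 10.
        "ai_only_citations": cited - ranked_and_cited,
    }


rows = [  # hypothetical sample data
    TrackedQuery("what is llm seo", ranks_top10=True, cited_in_ai=True),
    TrackedQuery("llm seo checklist", ranks_top10=True, cited_in_ai=False),
    TrackedQuery("aeo vs geo", ranks_top10=False, cited_in_ai=True),
    TrackedQuery("ai overviews eligibility", ranks_top10=True, cited_in_ai=True),
]
print(ai_kpis(rows))
```

Counting AI-only citations separately reflects the idea of treating rankings and AI citations as complementary channels: a page can earn one without the other.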

Google itself recommends measuring value over volume. Instead of focusing only on impressions and clicks, track how these visits contribute to meaningful actions — form fills, leads, or purchases.
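To compare referrer quality on value rather than volume, you can group sessions by referrer and compare conversion rates. The Python sketch below assumes a simple export of (referrer URL, converted) pairs; the AI assistant hostnames are assumptions to check against your own analytics, and the session data is hypothetical. Clicks from Google’s own AI features typically cannot be told apart from other Google clicks by referrer alone, so this approach separates third-party assistants from organic search but cannot isolate AI Overviews or AI Mode.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Assumed referrer hostnames; check them against your own analytics data.
AI_ASSISTANT_HOSTS = ("chatgpt.com", "perplexity.ai", "copilot.microsoft.com", "gemini.google.com")
SEARCH_HOSTS = ("google.com", "bing.com", "duckduckgo.com")


def matches(host: str, domains: tuple[str, ...]) -> bool:
    """True if host is one of the domains or a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def channel(referrer: str) -> str:
    host = urlparse(referrer).hostname or ""
    # Check AI hosts first so gemini.google.com is not counted as search.
    if matches(host, AI_ASSISTANT_HOSTS):
        return "ai_assistant"
    if matches(host, SEARCH_HOSTS):
        return "organic_search"
    return "other"


def conversion_rate_by_channel(sessions: list[tuple[str, bool]]) -> dict[str, float]:
    """Conversion rate per channel from (referrer URL, converted) pairs."""
    totals: dict[str, int] = defaultdict(int)
    conversions: dict[str, int] = defaultdict(int)
    for referrer, converted in sessions:
        ch = channel(referrer)
        totals[ch] += 1
        conversions[ch] += int(converted)
    return {ch: conversions[ch] / totals[ch] for ch in totals}


sessions = [  # hypothetical export
    ("https://www.perplexity.ai/search?q=llm+seo", True),
    ("https://chatgpt.com/", False),
    ("https://gemini.google.com/app", True),
    ("https://www.google.com/", True),
    ("https://www.google.com/", False),
    ("https://www.google.com/", False),
]
print(conversion_rate_by_channel(sessions))
```

With samples this small the rates are only illustrative; in practice you would want enough sessions per channel before drawing conclusions.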

GEO: The Science of Generative Engine Optimization

If AEO creates extractable answers, GEO tests which formats get cited most often.

Research published on arXiv and within the KDD community treats generative engines as optimization targets. The idea is to create multiple content variations and analyze which patterns appear more often in AI responses.

You can apply this practically by:

- Testing different intro formats and FAQ layouts.

- Tracking which variants are cited in AI experiences.

- Using insights to refine content clusters around high-value topics.

It’s similar to conversion rate optimization (CRO), but here, the goal is to improve AI inclusion rates, not clicks. GEO complements AEO by helping you continuously learn which structure, tone, or schema type improves visibility inside AI-generated answers.
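The GEO loop resembles an A/B test on citation data. Suppose you run the same set of tracked queries against two content variants, across comparable pages or time periods, and record how many checks cite each; that tracking setup and the sample counts below are assumptions. The Python sketch computes the lift in inclusion rate and a two-sided p-value from a pooled two-proportion z-test, a standard CRO statistic used here for illustration rather than a method taken from the GEO research. It assumes independent checks and reasonably large samples, which AI answers that vary from run to run may not fully satisfy.

```python
import math


def inclusion_rate_lift(cited_a: int, checks_a: int,
                        cited_b: int, checks_b: int) -> tuple[float, float]:
    """Return (B's inclusion rate minus A's, two-sided p-value).

    Uses a pooled two-proportion z-test, which assumes independent checks
    and reasonably large samples.
    """
    rate_a = cited_a / checks_a
    rate_b = cited_b / checks_b
    pooled = (cited_a + cited_b) / (checks_a + checks_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / checks_a + 1 / checks_b))
    if std_err == 0:  # both variants always (or never) cited: no evidence of a difference
        return rate_b - rate_a, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return rate_b - rate_a, p_value


# Hypothetical counts: variant A (definition-first intro) vs variant B (FAQ layout).
lift, p_value = inclusion_rate_lift(cited_a=30, checks_a=200, cited_b=52, checks_b=200)
print(f"Inclusion-rate lift: {lift:+.1%}, p-value: {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise; a large one means you should keep collecting checks before restructuring a content cluster around the winner.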

Key Takeaway: Think of it as CRO for AI visibility — iterating to raise inclusion rates.

Governance: Crawlers, Previews, and Publisher Models

Governance plays a growing role in LLM SEO strategy.

Google allows you to manage how content appears in AI features through preview controls like nosnippet, max-snippet, or noindex. Using these gives you control over what part of your content can be summarized. However, stricter limits may reduce your inclusion rate.

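Organizations are also reviewing permissions for AI crawlers. Google-Extended is a robots.txt token that controls whether your content may be used to train and ground Google’s Gemini models; it does not affect inclusion or ranking in Google Search, including AI Overviews. You can manage access for tokens like this, and for crawlers such as PerplexityBot, in your robots.txt file: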

User-agent: Google-Extended
Allow: /

User-agent: PerplexityBot
Disallow: /private/
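These directives use robots.txt syntax, read from /robots.txt at the site root, where blank lines separate groups. The Python sketch below checks the same rules with the standard library’s `urllib.robotparser`, written without blank lines inside each group. Python’s parser, for example, treats a blank line as the end of a group, so a blank line between `User-agent` and `Disallow` causes the rule to be ignored. The example.com URLs are placeholders, and Google’s own parser may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# The same directives as above; only the blank line between groups remains.
ROBOTS_TXT = """\
User-agent: Google-Extended
Allow: /

User-agent: PerplexityBot
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Google-Extended", "https://example.com/blog/llm-seo"),
    ("PerplexityBot", "https://example.com/blog/llm-seo"),
    ("PerplexityBot", "https://example.com/private/pricing"),
]
for agent, url in checks:
    verdict = "allowed" if robots.can_fetch(agent, url) else "blocked"
    print(f"{agent:16} {url:40} {verdict}")

# With a blank line between User-agent and Disallow, Python's parser drops the rule.
broken = RobotFileParser()
broken.parse("User-agent: PerplexityBot\n\nDisallow: /private/".splitlines())
print("broken file allows /private/:",
      broken.can_fetch("PerplexityBot", "https://example.com/private/pricing"))
```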

Meanwhile, Perplexity’s revenue-sharing program for publishers hints at future citation-based value models.

The Future of LLM SEO

The journey from Google’s Search Generative Experience (SGE) to today’s AI Overviews shows how fast search is evolving. What began as a small test inside Google Labs in 2023 has now transformed into a global feature used by millions of users every day.

As AI systems continue to evolve, the lines between search, chat, and recommendation engines will blur. Gartner predicted in 2024 that by 2026, traditional search engine volume will drop 25 percent, with search marketing losing market share to AI chatbots and other virtual agents.

This means visibility will depend on how clearly your brand communicates knowledge, context, and credibility. Websites that invest in structured data, consistent updates, and meaningful storytelling will continue to surface in AI-driven experiences.

Preparing for the Next Evolution

The rise of AI-driven search marks a new phase for digital visibility. Instead of competing only for rankings, brands now compete for citations and credibility inside the answer layer itself. The good news is that the principles of great SEO still apply — clear structure, credible sources, and a focus on people-first content.

At Savit Interactive, we have seen how quickly the search landscape is transforming. As a leading AI SEO services company in India, we focus on strategies that help brands stay visible across both traditional and AI-driven search engines.

Our approach combines Answer Engine Optimization, Generative Engine Optimization, and structured data best practices so your content can speak directly to both people and large language models.

The world of LLM SEO is still growing, but one thing is clear: visibility now means being part of the answer. By understanding how AI interprets, summarizes, and shares information, your brand can stay one step ahead in the future of search.

Key Takeaway: Visibility now means being part of the answer.

FAQs

Q: What is LLM SEO in simple terms?

A: It’s the process of optimizing content so that AI-driven search engines can understand, retrieve, and cite it directly in their generated responses.

Q: Do you need special tags for Google AI Overviews or AI Mode?

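A: No. According to Google Search Central, a page only needs to be indexed and eligible to appear in Google Search with a snippet. Structured data that matches the visible content is recommended, but no special tags are required.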

Q: How is LLM SEO different from AEO and GEO?

A: AEO focuses on writing clear answers for extraction. GEO focuses on experimentation and data-driven inclusion in generative engines. LLM SEO covers both within a single strategy.

Q: How do you track AI visibility?

A: Combine Search Console data with AI inclusion trackers and analytics tools to measure engagement and conversion quality rather than just click counts.

Q: What about zero-click searches?

A: They’re increasing, but you can turn them into visibility opportunities by ensuring your brand is cited within AI responses.